SQL Scripts: SQL performance issues from wasted buffer pool memory

The reason I’m interested in this, and you should be too, is that memory is one of the most important resources that SQL Server uses. If you don’t have enough memory, your workload will suffer because:

- You’ll be driving more read I/Os because more of the workload can’t fit in the buffer pool.

- You’ll be driving more write I/Os because the lazywriter will have to write dirty pages out of the buffer pool to free up space.

- You may encounter RESOURCE_SEMAPHORE waits because queries can’t get the query execution memory grants that they need.

- You may cause excessive plan recompilations if the plan cache is too constrained.

And a bunch of other things.

One of the memory problems that Kimberly discussed in depth last year (and teaches in depth in our Performance Tuning classes) is single-use plan cache bloat – where a large proportion of the plan cache is filled with single-use plans that don’t ever get used again. You can read about it in the three blog posts in her Plan Cache category, along with how to identify plan cache bloat and what you can do about it.

This post is about the memory the buffer pool is using to store data file pages, and whether good use is being made of it.

Tracking data density

The sys.dm_os_buffer_descriptors DMV gives the information stored by the buffer pool for each data file page in memory (called a BUF structure in the code). One of the things that this structure keeps track of is the free_space_in_bytes for each page. This metric is updated in real time as changes are made to the page in memory (you can easily prove this for yourself) and so is a reliable view of the data density of the used portion of the buffer pool.

Data density? Think of this as how packed full of data, index, or LOB rows a data file page is. The more free space on the page, the lower the data density.
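One way to check the real-time behavior for yourself is sketched below. This is a minimal sketch rather than the author's own test: the table `dbo.DensityDemo`, the view `dbo.DensityDemoPages`, and their columns are hypothetical names invented for illustration, and it assumes a scratch database where you can create objects and query the DMV. It inserts a few rows, reads `free_space_in_bytes` for the table's in-memory data pages, inserts more rows, and reads the values again.

```sql
-- Hypothetical scratch table (not from the original post): each row is roughly 1KB.
CREATE TABLE dbo.DensityDemo (
    [id] INT IDENTITY (1, 1) NOT NULL PRIMARY KEY CLUSTERED,
    [filler] CHAR (1000) NOT NULL DEFAULT 'a'
);
GO

-- Hypothetical helper view so the same buffer pool query can be run twice.
CREATE VIEW dbo.DensityDemoPages
AS
SELECT
    bd.[page_id],
    bd.[row_count],
    bd.[free_space_in_bytes],
    bd.[is_modified]
FROM sys.dm_os_buffer_descriptors AS bd
INNER JOIN sys.allocation_units AS au
    ON au.[allocation_unit_id] = bd.[allocation_unit_id]
INNER JOIN sys.partitions AS p
    ON au.[container_id] = p.[hobt_id]      -- in-row data maps to hobt_id
WHERE bd.[database_id] = DB_ID ()
    AND au.[type] = 1                       -- IN_ROW_DATA
    AND p.[object_id] = OBJECT_ID (N'dbo.DensityDemo')
    AND bd.[page_type] = N'DATA_PAGE';
GO

-- Insert three rows, then look at the page in memory.
INSERT INTO dbo.DensityDemo DEFAULT VALUES;
INSERT INTO dbo.DensityDemo DEFAULT VALUES;
INSERT INTO dbo.DensityDemo DEFAULT VALUES;

SELECT * FROM dbo.DensityDemoPages;
GO

-- Insert three more rows and look again, without waiting for a checkpoint.
INSERT INTO dbo.DensityDemo DEFAULT VALUES;
INSERT INTO dbo.DensityDemo DEFAULT VALUES;
INSERT INTO dbo.DensityDemo DEFAULT VALUES;

SELECT * FROM dbo.DensityDemoPages;
GO

-- Clean up the demo objects.
DROP VIEW dbo.DensityDemoPages;
DROP TABLE dbo.DensityDemo;
GO
```

If the metric is maintained in real time as described, the second result should show a higher `row_count` and a lower `free_space_in_bytes` for the same `page_id`, with `is_modified` typically set to 1 until the page is written to disk.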

Low data density pages are caused by:

- Very wide data rows (e.g. a table with a 5000-byte fixed-size row will only ever fit one row per page, wasting roughly 3000 bytes per page).

- Page splits, from random inserts into full pages or updates to rows on full pages. These kinds of page splits result in logical fragmentation that affects range scan performance, low data density in data/index pages, and increased transaction log overhead (see How expensive are page splits in terms of transaction log?).

- Row deletions where the space freed up by the deleted row will not be reused because of the insert pattern into the table/index.
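The wide-row case can be worked through with some simple arithmetic. The sketch below assumes 8096 bytes of each 8KB page are available for rows (8192 bytes minus the 96-byte page header) and ignores per-row overhead, so the figures are approximations; the row sizes other than 5000 are illustrative values chosen for comparison.

```sql
-- Assumption: 8096 usable bytes per page; per-row overhead (record header,
-- slot array entry) is ignored, so real pages waste slightly different amounts.
SELECT
    r.[RowBytes],
    8096 / r.[RowBytes] AS [RowsPerPage],        -- integer division
    8096 - (8096 / r.[RowBytes]) * r.[RowBytes] AS [WastedBytesPerPage],
    CAST (100.0 * (8096 - (8096 / r.[RowBytes]) * r.[RowBytes]) / 8096
        AS DECIMAL (4, 1)) AS [WastedPC]
FROM (VALUES (5000), (4100), (4000), (2700)) AS r ([RowBytes]);
```

For the 5000-byte row this gives one row per page and 3096 wasted bytes, which matches the "roughly 3000 bytes" above. A 4000-byte row, by contrast, fits two per page with very little left over, which is why small schema changes can have a large effect on density.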

Low data density pages can be detrimental to SQL Server performance, because the lower the density of records on the pages in a table:

- The higher the amount of disk space necessary to store the data (and back it up).

- The more I/Os are needed to read the data into memory.

- The higher the amount of buffer pool memory needed to store the extra pages in the buffer pool.

Survey results

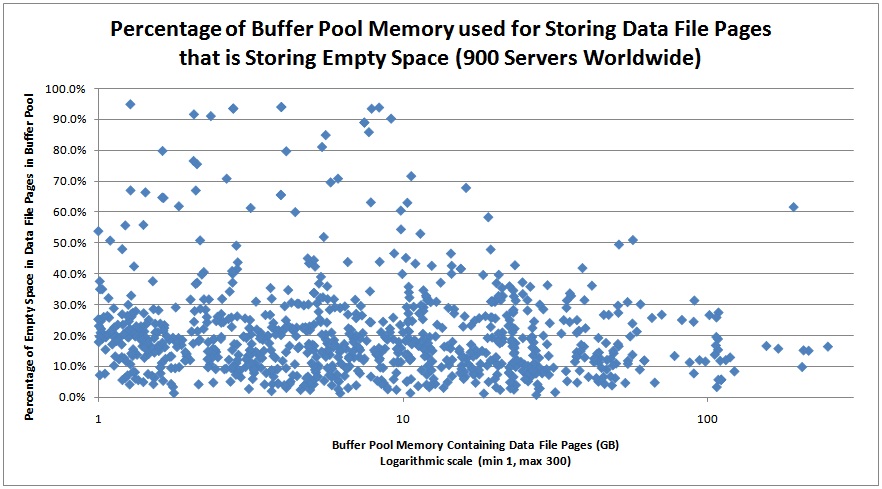

From the survey results I took all the SQL Servers that were using at least one GB of buffer pool memory for data file page storage (900 servers) and plotted that amount of memory against the percentage of that memory that was storing free space in the data file pages.

Wow! That’s a lot of servers with a lot of buffer pool memory storing nothing useful.

Low data-density solutions

So what can you do about it? There are a number of solutions to low page density including:

- Change the table schema (e.g. vertical partitioning, using smaller data types).

- Change the index key columns (usually only applicable to clustered indexes, e.g. changing the leading cluster key from a random value like a non-sequential GUID to a sequential GUID or identity column).

- Use index FILLFACTOR to reduce page splits, and…

- Periodically rebuild problem indexes.

- Consider enabling data compression on some tables and indexes.

From the graph above, bear in mind that some of the ‘wasted’ space on these servers could be from proper index management where data and index pages have a low FILLFACTOR set to alleviate page splits. But I suspect that only accounts for a small portion of what we’re seeing in this data.
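As a rough illustration of the FILLFACTOR, rebuild, and compression options in the list above, the statements below show what each might look like. The table `dbo.Orders` and index `IX_Orders_CustomerID` are hypothetical names, the FILLFACTOR value of 90 is an arbitrary starting point rather than a recommendation, and data compression availability depends on your SQL Server version and edition.

```sql
-- Hypothetical object names throughout; substitute your own table and index.

-- Rebuild a problem index, leaving about 10% free space on each leaf page
-- so random inserts are less likely to cause page splits.
ALTER INDEX [IX_Orders_CustomerID] ON [dbo].[Orders]
REBUILD WITH (FILLFACTOR = 90);
GO

-- Estimate how much space PAGE compression might save before enabling it.
EXEC sys.sp_estimate_data_compression_savings
    @schema_name = N'dbo',
    @object_name = N'Orders',
    @index_id = NULL,              -- NULL = all indexes on the table
    @partition_number = NULL,      -- NULL = all partitions
    @data_compression = N'PAGE';
GO

-- If the estimate looks worthwhile, rebuild the index with compression.
ALTER INDEX [IX_Orders_CustomerID] ON [dbo].[Orders]
REBUILD WITH (DATA_COMPRESSION = PAGE);
GO
```

A lower FILLFACTOR deliberately trades some data density for fewer page splits, so it is worth re-checking the buffer pool free space after changing it.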

The purpose of my survey and this post is not to explain how to make all the changes to reduce the amount of free space being stored in memory, but to educate you that this is a problem. PAGEIOLATCH waits are often prevalent on systems because the workload is driving more I/O than necessary, through things like bad plans causing table scans or low data density, rather than because the I/O subsystem itself is at fault. If you can figure out that it’s not an I/O subsystem problem, then you as the DBA can do something about it.
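To see how prevalent PAGEIOLATCH waits are on a system, a query along these lines against sys.dm_os_wait_stats is one starting point. It is a minimal sketch: the `AvgWaitMs` column is a derived name for illustration, and the numbers are cumulative since the instance started or the wait statistics were last cleared, so they need interpreting against uptime and overall workload.

```sql
-- PAGEIOLATCH_xx waits accumulate while threads wait for data file pages
-- to be read from disk into the buffer pool.
SELECT
    [wait_type],
    [waiting_tasks_count],
    [wait_time_ms],
    CAST (1.0 * [wait_time_ms] / NULLIF ([waiting_tasks_count], 0)
        AS DECIMAL (18, 1)) AS [AvgWaitMs]    -- NULL when there were no waits
FROM sys.dm_os_wait_stats
WHERE [wait_type] LIKE N'PAGEIOLATCH[_]%'
ORDER BY [wait_time_ms] DESC;
```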

Helpful code to run

Below is a script that analyzes the buffer pool and breaks down, by database, how much space is being taken up in the buffer pool and how much of that space is empty. On systems with hundreds of GB of memory in use, this query may take a while to run:

    SELECT
        (CASE WHEN ([database_id] = 32767)
            THEN N'Resource Database'
            ELSE DB_NAME ([database_id]) END) AS [DatabaseName],
        COUNT (*) * 8 / 1024 AS [MBUsed],
        SUM (CAST ([free_space_in_bytes] AS BIGINT)) / (1024 * 1024) AS [MBEmpty]
    FROM sys.dm_os_buffer_descriptors
    GROUP BY [database_id];
    GO

And here’s some sample output from a client system (made anonymous, of course):

    DatabaseName        MBUsed   MBEmpty
    ------------------- -------- ---------
    Resource Database   51       11
    ProdDB              71287    9779
    master              2        1
    msdb                481      72
    ProdDB2             106      17
    model               0        0
    tempdb              2226     140
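The two columns are easier to compare as a percentage. In the sample output, ProdDB has 9779 MB empty out of 71287 MB used, which is about 13.7% of its buffer pool footprint. The variation below is a sketch that adds that calculation to the per-database query; the `PercentEmpty` column name and the ordering are additions for illustration, not part of the original script.

```sql
SELECT
    (CASE WHEN ([database_id] = 32767)
        THEN N'Resource Database'
        ELSE DB_NAME ([database_id]) END) AS [DatabaseName],
    COUNT (*) * 8 / 1024 AS [MBUsed],
    SUM (CAST ([free_space_in_bytes] AS BIGINT)) / (1024 * 1024) AS [MBEmpty],
    -- Free bytes as a percentage of all bytes held in 8KB (8192-byte) pages.
    CAST (100.0 * SUM (CAST ([free_space_in_bytes] AS BIGINT))
        / (COUNT_BIG (*) * 8192) AS DECIMAL (4, 1)) AS [PercentEmpty]
FROM sys.dm_os_buffer_descriptors
GROUP BY [database_id]
ORDER BY [MBEmpty] DESC;
GO
```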

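You can then drill in further with a more comprehensive script that breaks the usage down by table and index for every database with pages in the buffer pool: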

    EXEC sp_MSforeachdb
        N'IF EXISTS (SELECT 1 FROM (SELECT DISTINCT DB_NAME ([database_id]) AS [name]
        FROM sys.dm_os_buffer_descriptors) AS names WHERE [name] = ''?'')
    BEGIN
    USE [?]
    SELECT
        ''?'' AS [Database],
        OBJECT_NAME (p.[object_id]) AS [Object],
        p.[index_id],
        i.[name] AS [Index],
        i.[type_desc] AS [Type],
        --au.[type_desc] AS [AUType],
        --DPCount AS [DirtyPageCount],
        --CPCount AS [CleanPageCount],
        --DPCount * 8 / 1024 AS [DirtyPageMB],
        --CPCount * 8 / 1024 AS [CleanPageMB],
        (DPCount + CPCount) * 8 / 1024 AS [TotalMB],
        --DPFreeSpace / 1024 / 1024 AS [DirtyPageFreeSpace],
        --CPFreeSpace / 1024 / 1024 AS [CleanPageFreeSpace],
        ([DPFreeSpace] + [CPFreeSpace]) / 1024 / 1024 AS [FreeSpaceMB],
        CAST (ROUND (100.0 * (([DPFreeSpace] + [CPFreeSpace]) / 1024) / (([DPCount] + [CPCount]) * 8), 1) AS DECIMAL (4, 1)) AS [FreeSpacePC]
    FROM
        (SELECT
            allocation_unit_id,
            SUM (CASE WHEN ([is_modified] = 1)
                THEN 1 ELSE 0 END) AS [DPCount],
            SUM (CASE WHEN ([is_modified] = 1)
                THEN 0 ELSE 1 END) AS [CPCount],
            SUM (CASE WHEN ([is_modified] = 1)
                THEN CAST ([free_space_in_bytes] AS BIGINT) ELSE 0 END) AS [DPFreeSpace],
            SUM (CASE WHEN ([is_modified] = 1)
                THEN 0 ELSE CAST ([free_space_in_bytes] AS BIGINT) END) AS [CPFreeSpace]
        FROM sys.dm_os_buffer_descriptors
        WHERE [database_id] = DB_ID (''?'')
        GROUP BY [allocation_unit_id]) AS buffers
    INNER JOIN sys.allocation_units AS au
        ON au.[allocation_unit_id] = buffers.[allocation_unit_id]
    INNER JOIN sys.partitions AS p
        ON au.[container_id] = p.[partition_id]
    INNER JOIN sys.indexes AS i
        ON i.[index_id] = p.[index_id] AND p.[object_id] = i.[object_id]
    WHERE p.[object_id] > 100 AND ([DPCount] + [CPCount]) > 12800 -- Taking up more than 100MB
    ORDER BY [FreeSpacePC] DESC;
    END';

And here’s some sample output from the same client system with the more comprehensive script:

    Database Object index_id Index        Type         TotalMB FreeSpaceMB FreeSpacePC
    -------- ------ -------- ------------ ------------ ------- ----------- -----------
    ProdDB   TableG 1        TableG_IX_1  CLUSTERED    531     130         24.5
    ProdDB   TableI 1        TableI_IX_1  CLUSTERED    217     48          22.2
    ProdDB   TableG 2        TableG_IX_2  NONCLUSTERED 127     27          21.8
    ProdDB   TableC 1        TableC_IX_1  CLUSTERED    224     47          21.4
    ProdDB   TableD 3        TableD_IX_3  NONCLUSTERED 1932    393         20.4
    ProdDB   TableH 1        TableH_IX_1  CLUSTERED    162     33          20.4
    ProdDB   TableF 5        TableF_IX_5  NONCLUSTERED 3128    616         19.7
    ProdDB   TableG 9        TableG_IX_9  NONCLUSTERED 149     28          19.1
    ProdDB   TableO 10       TableO_IX_10 NONCLUSTERED 1003    190         19
    ProdDB   TableF 6        TableF_IX_6  NONCLUSTERED 3677    692         18.8

This is cool because it’s a much less intrusive way to figure out which tables and indexes have data density issues than running sys.dm_db_index_physical_stats (see this post for an in-depth view of this DMV). You can mess around with the script to make it create a table to hold all the data for all databases and slice and dice it however you want. I have a much more comprehensive script that I use on client systems, but this one will provide you with lots of relevant data.
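One possible way to collect the results into a table is sketched here. It is a simplified variant, not the more comprehensive script mentioned above: the temp table `#BufferDensity` and its column names are hypothetical, it totals clean and dirty pages together, it drops the 100MB filter so you can apply your own afterwards, and it reuses the same joins and the undocumented sp_MSforeachdb procedure as the script earlier in the post.

```sql
-- Hypothetical temp table; names and columns are illustrative only.
CREATE TABLE #BufferDensity (
    [DatabaseName] SYSNAME NOT NULL,
    [ObjectName] SYSNAME NULL,
    [index_id] INT NOT NULL,
    [IndexName] SYSNAME NULL,          -- NULL for heaps
    [TotalMB] BIGINT NOT NULL,
    [FreeSpaceMB] BIGINT NOT NULL
);

-- A temp table created in this session is visible to the dynamic SQL
-- that sp_MSforeachdb runs for each database.
EXEC sp_MSforeachdb
    N'IF EXISTS (SELECT 1 FROM sys.dm_os_buffer_descriptors
        WHERE [database_id] = DB_ID (N''?''))
BEGIN
    USE [?];
    INSERT INTO #BufferDensity
    SELECT
        N''?'',
        OBJECT_NAME (p.[object_id]),
        p.[index_id],
        i.[name],
        COUNT_BIG (*) * 8 / 1024,
        SUM (CAST (bd.[free_space_in_bytes] AS BIGINT)) / 1024 / 1024
    FROM sys.dm_os_buffer_descriptors AS bd
    INNER JOIN sys.allocation_units AS au
        ON au.[allocation_unit_id] = bd.[allocation_unit_id]
    INNER JOIN sys.partitions AS p
        ON au.[container_id] = p.[partition_id]
    INNER JOIN sys.indexes AS i
        ON i.[index_id] = p.[index_id] AND i.[object_id] = p.[object_id]
    WHERE bd.[database_id] = DB_ID ()
        AND p.[object_id] > 100
    GROUP BY p.[object_id], p.[index_id], i.[name];
END';

-- Example slice: the 20 indexes holding the most free space in memory.
SELECT TOP (20)
    [DatabaseName], [ObjectName], [index_id], [IndexName],
    [TotalMB], [FreeSpaceMB],
    CAST (100.0 * [FreeSpaceMB] / NULLIF ([TotalMB], 0)
        AS DECIMAL (4, 1)) AS [FreeSpacePC]
FROM #BufferDensity
ORDER BY [FreeSpaceMB] DESC;

DROP TABLE #BufferDensity;
```

Sorting by `FreeSpaceMB` rather than by percentage puts the indexes wasting the most memory in absolute terms at the top, which is often where a fix pays off first.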