Monitoring SQL Server database transaction log usage

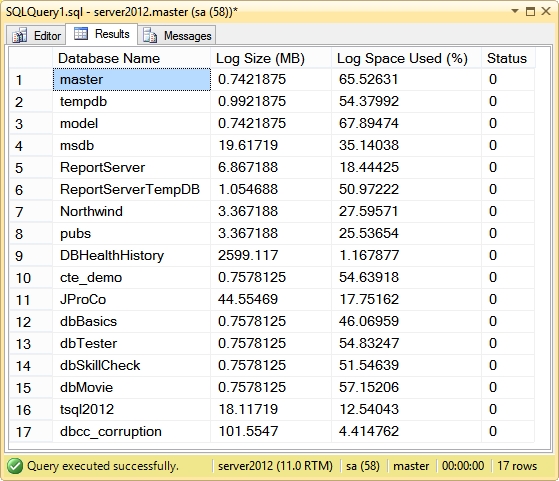

In this post I will provide a method for monitoring your database transaction logs using DBCC SQLPERF(logspace), which can be used to return transaction log space usage statistics for your databases. We know there are many different causes for the transaction logs to expand. It could be a very large or long-running transaction like index maintenance, or even a data archival routine (with lots of deletes). You may also be using a FULL Recovery Model, but you aren't performing transaction log backups... or you're not performing them as frequently as you should.

There are many causes for log growth, and you should be aware of that expansion so that you can act before a problem occurs. This tip is a good way to keep an eye on that transaction log growth and utilization.

First we need a table to store the output of DBCC SQLPERF(logspace). We will use this table to store log use statistics over time. This will enable you to analyze historically, which will be helpful for trending and diagnosing database and log growth.

CREATE TABLE dbo.MonitorLogs (

LogID INT PRIMARY KEY IDENTITY(1,1),

LogDate DATETIME NOT NULL DEFAULT(GETDATE()),

DBName VARCHAR(100) NOT NULL,

LogSizeMB DECIMAL(18, 4) NOT NULL,

LogSpaceUsed DECIMAL(18, 4) NOT NULL,

LogStatus INT NOT NULL

)

Now this is how we will execute the DBCC statement, directing the output to our new table:

INSERT dbo.MonitorLogs (DBName,LogSizeMB,LogSpaceUsed,LogStatus)

EXEC ('DBCC SQLPERF(logspace)')

INSERT dbo.MonitorLogs (LogDate,DBName,LogSizeMB,LogSpaceUsed,LogStatus)

SELECT DATEADD(d,-1,LogDate),DBName,LogSizeMB,LogSpaceUsed,LogStatus

FROM dbo.MonitorLogs

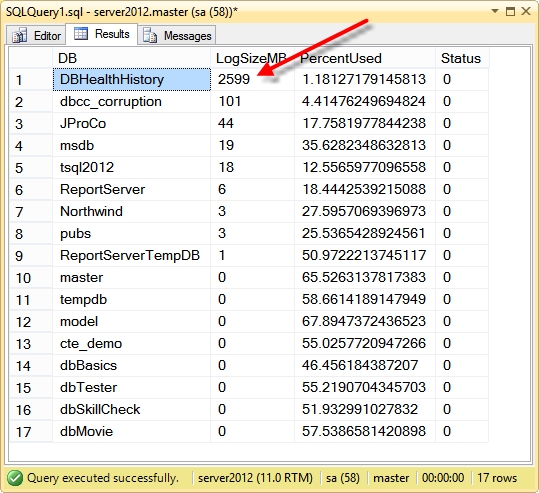

Check the data, you will now see statistics from yesterday as well. You will use this last statement to compare yesterday's data with today's. The principal is just the monitoring of your transaction log space over time.

DECLARE @Yesterday DATE = CONVERT(DATE, GETDATE()-1);

DECLARE @Today DATE = CONVERT(DATE, GETDATE());

SELECT

COALESCE(b.DBName,a.DBName) [Database],

a.LogSizeMB [TodayLogSizeMB],

b.LogSizeMB [YestLogSizeMB],

a.LogSizeMB - b.LogSizeMB [SizeMBDiff],

a.LogSpaceUsed [TodayLSpaceUsed],

b.LogSpaceUsed [YestLSpaceUsed],

a.LogSpaceUsed - b.LogSpaceUsed [UsedDiff]

FROM

dbo.MonitorLogs a FULL OUTER JOIN dbo.MonitorLogs b

ON a.DBName = b.DBName

WHERE

CONVERT(DATE, a.LogDate) = @Yesterday

AND CONVERT(DATE, b.LogDate) = @Today

ORDER BY

TodayLogSizeMB DESC

This is just one way to get the job done. In the newer releases you should take a look at both of these DMVs:

sys.dm_tran_database_transactions http://msdn.microsoft.com/en-us/library/ms186957.aspx

sys.dm_tran_active_transactions http://msdn.microsoft.com/en-us/library/ms174302.aspx