Automating daily transaction log reading

One of the common questions our customers have about auditing is – how to automate transaction log reading and have all the information you might need at hand. Another common request is – how to save the transactions I see in the ApexSQL Log grid, so I can easily filter, group, order and query them without running the whole ApexSQL Log reading process again? In case of large transaction logs, the reading process may take a long time, and I want to avoid running it each time I want to see the transactions

There’s no out-of-the-box solution for these requests. What you can do is read the transaction logs and store the transactions read in SQL tables in advance, so you can query the transactions just like any other SQL data

This is where the ApexSQL Log feature to export transactions into SQL or SQL bulk scripts can help

Export to SQL bulk creates three files. When executed, they insert the transactions read from the transaction logs into the APEXSQL_LOG_OPERATION_DETAIL and APEXSQL_LOG_OPERATION tables

The files created are:

- SQL that creates the APEXSQL_LOG_OPERATION_DETAIL and APEXSQL_LOG_OPERATION tables, and contains BULK INSERT commands. By default, its named export.sql

BULK INSERT APEXSQL_LOG_OPERATION FROM 'E:\test\Operations_05-16-2013.sql' WITH(DATAFILETYPE = 'widechar') BULK INSERT APEXSQL_LOG_OPERATION_DETAIL FROM 'E:\test\Details_05-16-2013.sql' WITH(DATAFILETYPE = 'widechar') GO - A bulk file for the APEXSQL_LOG_OPERATION table – by default named exportOperations, contains the information about the operation type, object affected, schema name, user name, etc. This is the information shown in the upper part of the ApexSQL Log grid

- A bulk file for the APEXSQL_LOG_OPERATION_DETAIL table – by default named exportDetails, contains the LSN, column name and type, old and new values. This is the information shown on the ApexSQL Log Operation details tab

We recommend running ApexSQL Log every night, reading the transactions that happened in the last 24 hours, creating SQL BULK and immediately executing it to store the read transactions into SQL tables. This way even if you don’t keep the old transaction log backups, you will have their content stored in 2 SQL tables

- Create a batch file using the statement in this format:

- The next step is to schedule the execution of this batch using SQL Server jobs Start SQL Server Management Studio and create a new job

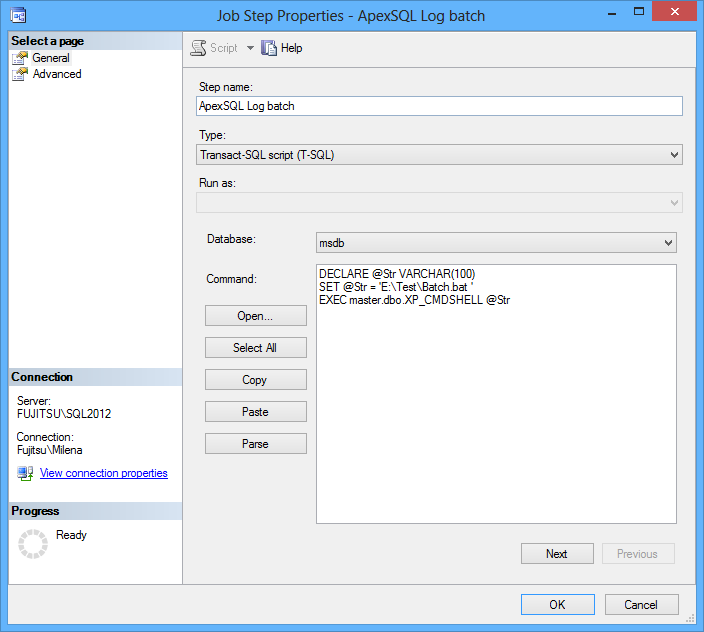

- Specify the job name and add steps. In the first step, paste the following:

DECLARE @Str VARCHAR(100) SET @Str = 'E:\Test\Batch.bat ' EXEC master.dbo.XP_CMDSHELL @Str

- Add the following SQL to the second step

DECLARE @SQLStatement VARCHAR(2000) SET @SQLStatement = 'sqlcmd -S Fujitsu\SQL2012 -d AdventureWorks2012 -i E:\test\SQLBulk_' + CONVERT(nvarchar(30), GETDATE(), 110) +'.sql' EXECUTE master.dbo.xp_cmdshell @SQLStatement

- Create a new schedule for the job, and specify its frequency and the time when it starts

- Right-click the job, select Start Job at Step, and select the first step to test the job

“<ApexSQL Log installation path>\ApexSQLLog.com” /server:<server name> /database:<database name> /trusted /ec:begintime endtime lsn operation schema object transactionid user /bulk:<file1 path and name>.sql :<file2 path and name>.sql:<file3 path and name>.sql /operations:DMLALL DDLALL /time_period:24hours /backup:<transaction log backups path and names> /db_backup: :<database backups path and names> /v /f

The /backup and /db_backup switches support wildcards, and you can leverage that with the Command Line expansion to add to the auditing process only those transaction log and database backups created during the day

To add to the auditing process only those .trn files with names that start with e.g. AW2012_0516, such as

use

/backup:E:\Test\Backup\AW2012_%date:~4,2%%date:~7,2%*.trn

To add only the full database backups created during the day, with names that start with, for example, AW2012_0516, such as J, AW2012_05162013_0100.bak, use:

/db_backup:E:\Test\Backup\AW2012__%date:~4,2%%date:~7,2%*.bak

For instance, to read the transactions that happened in the last 24 hours in the AdventureWorks2012 database on the SQL Server instance Fujitsu\SQL2012, add only the backups created during the day, and create files in the E:\test folder that contain the creation date in their names:

“C:\Program Files\ApexSQL\ApexSQLLog2011test1088\ApexSQLLog.com” /server:FUJITSU\SQL2012 /database:AdventureWorks2012 /trusted /ec:begintime endtime lsn operation schema object transactionid user /bulk:E:\test\SQLBulk_%date:~4,2%-%date:~7,2%-%date:~10,4%.sql E:\test\Operations_%date:~4,2%-%date:~7,2%-%date:~10,4%.sql E:\test\Details_%date:~4,2%-%date:~7,2%-%date:~10,4%.sql /operations:DMLALL DDLALL /time_period:24hours /v /f /backup:E:\Test\Backup\AW2012_%date:~4,2%%date:~7,2%*.trn /db_backup:E:\Test\Backup\AW2012__%date:~4,2%%date:~7,2%*.bak

Keep in mind that the selected transaction log and database backups must form a full chain

Save the batch file with the bat extension, for example E:\Test\batch.bat

It will use the Command Shell to execute the created SQL file and BULK insert transactions into SQL tables

If the job is executed successfully, you’re all set

Reading database transaction logs doesn’t have to take up any of your time. Schedule the reading to occur every night – have ApexSQL Log read all transaction log backups and full database backups created during the day, filter them for the last 24 hours, and insert the transactions into the APEXSQL_LOG_OPERATION and APEXSQL_LOG_OPERATION_DETAIL tables. Then when you arrive to your office in the morning, the transactions stored in the SQL tables will be awaiting for your queries